Stanford robotics research dates back more than 50 years. One of the early breakthroughs was Shakey, a robot that resembled a wobbling tower of computer parts, developed by Stanford-affiliated SRI in 1966. Over time, designs have grown sleeker and more sophisticated. Today, Stanford robots include a humanoid deep-sea diver, a gecko-like climber, and a soft robot that grows like a vine.

This fall, the new Stanford Robotics Center opened, providing a space where robotics research at Stanford Engineering can continue to blossom. The center includes six bays for testing and showcasing robots, with areas that resemble kitchens, bedrooms, hospitals and warehouses. Stanford Engineering faculty are eager to use the space not only to advance their designs but also to work more closely with colleagues. “We will be conducting our research side by side in an open space,” says Karen Liu, a professor of computer science. “We will have more research collaboration, because now we’re neighbors.”

Here is a brief glimpse into the work some of our faculty are doing in this space.

Renee Zhao: Soft robots for minimally invasive surgery

Human organs are soft and squishy. Medical devices generally are not. That’s a problem for making surgeries less invasive, as inserting a tube through a blood vessel or other bodily tract can be difficult and potentially damaging. “We’re interested in soft systems because in soft systems, you essentially have infinite degrees of freedom – every single material point can deform,” says Renee Zhao, an assistant professor of mechanical engineering. “It’s better at interacting with human beings because we have soft tissues.”

Zhao is developing tomorrow’s medical robots from origami-like structures made of soft plastic and magnets. Some of her designs take inspiration from the movements of animals and their limbs, such as an octopus arm, an elephant trunk, and an inching earthworm.

Her latest project is a pill-sized swimming robot: a small cylinder with a propeller. Using a polymer replica of the brain’s blood vessels, she tested whether this swimmer could navigate the vessels’ twists and turns to reach and shrink a blood clot, a potential treatment for stroke. Researchers move the robot using a magnetic field and a joystick. In a future operating room, Zhao says, doctors could track the robot through X-rays, allowing them to guide it to a blood clot. “One of the biggest challenges for procedures using interventional radiology is the trackability and navigation capability,” she says. By eliminating those constraints, she says, “We want to revolutionize the existing minimally invasive surgery.”

Karen Liu: Mimicking human movement to help us get around

Liu, who was originally inspired by video game characters, wants to understand how we move. “The fundamental question is, I want to know if we can understand how the human body works to the point that we can recreate that,” she says.

To get there, she is teaching robots to move more like us. Using sensors that capture video, acceleration, torque and other variables, researchers gather data that feeds into “digital twins,” computer models that build on that information to predict human-like movement on their own.

One application Liu is working on is a robotic exoskeleton that can predict when its wearer is about to lose balance and activate only when needed to prevent a fall. Learning from human motion is critical, because the exoskeleton must build an intimate knowledge of its wearer from sensors and machine-learning algorithms. “It’s a machine that only gives you assistance when you need it,” she says, made possible by a rich understanding of how humans in general, and the individual wearer in particular, move.

Jeannette Bohg: Grasping how our hands manipulate objects

For robots to be functional in places like our homes, they need to be able to grab a variety of objects, which is more complicated than it might seem. For Jeannette Bohg, an assistant professor of computer science, the way we use our hands is a tantalizing puzzle.

Take, for example, setting a glass of water down on a nightstand. For starters, simply locating a clear glass, which means working out where its edges bend the light, is a challenge for an AI-powered robot. Then the robot arm must grasp the glass with the right pressure: too hard and it might break, too gentle and it will slip. It has to account for the friction of the glass and any condensation making it slippery. “You cannot write down how exactly to grasp your glass in the way that you can precisely write down a chess game,” says Bohg. “We don’t have all the information about the environment and we can’t describe the interaction between our hands and these objects to the sufficient amount of precision.”

To solve this problem, Bohg relies on machine learning. Because it’s impossible to spell out in code exactly how a robot should pick up an object, she instead demonstrates the actions while recording data from vision and touch sensors. Over many examples, the AI then learns how to perform the grasp. “If we did have robots that could actually manipulate all these things, they would be very useful in so many different ways in our world,” says Bohg.

Shuran Song: Helping home robots generalize

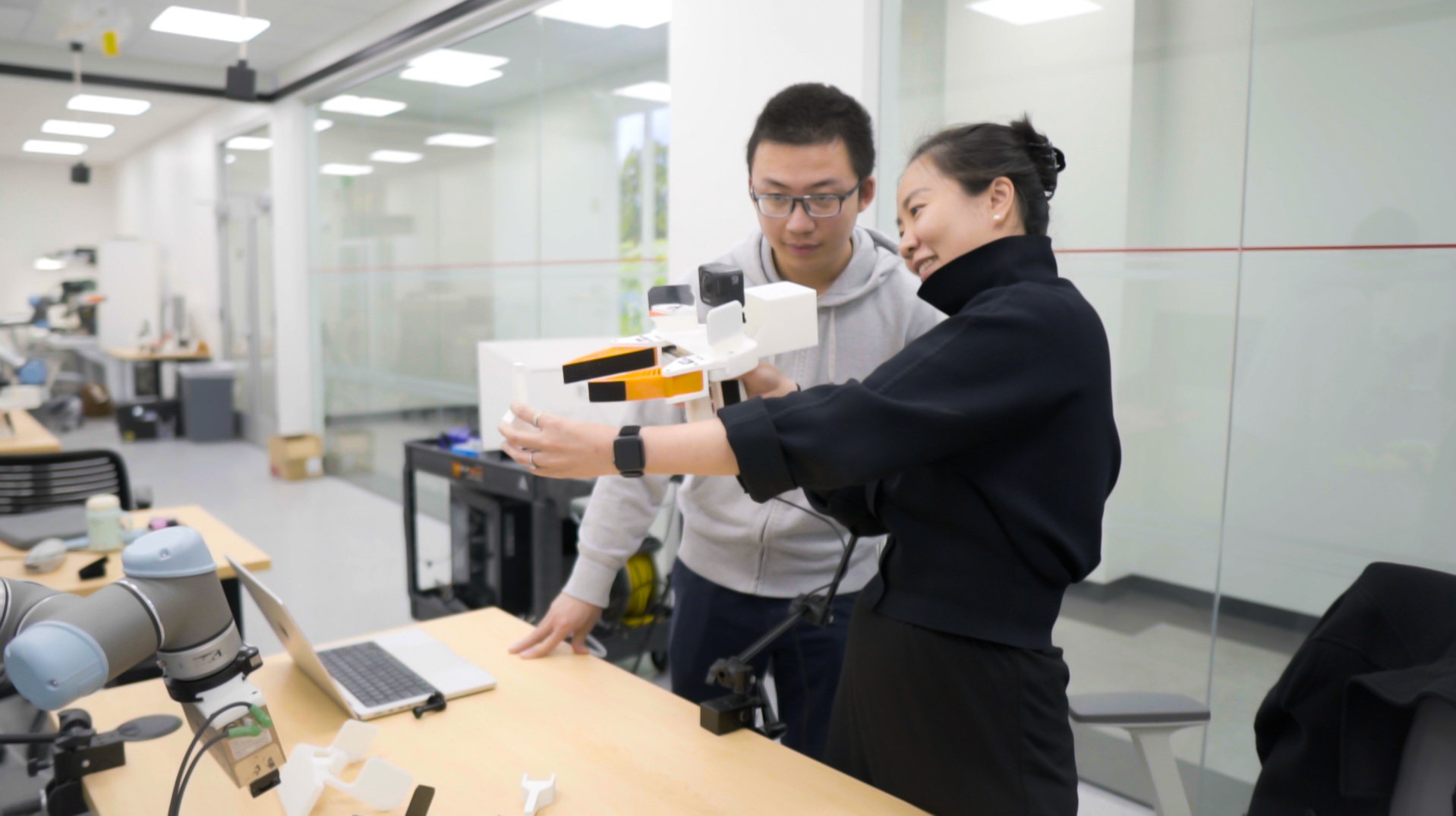

One bottleneck to building new robots is collecting training data. Traditionally, this has required a skilled technician to operate the robot through many demonstrations. But that process is very expensive and hard to scale, explains Shuran Song, an assistant professor of electrical engineering.

Song is helping solve that problem with UMI, the Universal Manipulation Interface. UMI is essentially a mechanical claw with a fisheye-lens camera attached to it. The gripper is easy to learn: roboticists just squeeze a trigger to open and close it. The video gathered by the camera can readily be translated into training data for robots. About 300 recorded repetitions of washing dishes, for example, are enough to train a pair of robotic arms to clean up cookware on their own. “This is a much easier way to scale up data collection,” says Song.

The gripper has been used to train several robotic arms as well as a dog-like quadruped robot. Because the tool is portable, it can collect data in a wide variety of settings, removing what had been a major barrier to building smarter robots: the need for large amounts of training data. More data, combined with advances in machine learning, will help robots perceive their environment well enough to go beyond a narrow set of tasks, much as our own perception does. Adds Song, “We’re trying to give the robot a similar ability to sense a 3D environment.”

Monroe Kennedy III: Better prosthetics through collaboration

Once a robot can act autonomously, making independent decisions, can it also be an effective teammate? To collaborate, whether you’re a human or robot, “you need to do things in a very integrated way,” says Monroe Kennedy III, an assistant professor of mechanical engineering, “which means you need to be able to model the behavior and intent of the teammate that you’re working with.”

One way he’s exploring this challenge is by designing an intelligent prosthetic arm. The prosthesis has 22 degrees of freedom, which means it can flex and bend in more ways than current prosthetics. But a user with an amputation at the shoulder would find such a device difficult to control on their own.

To overcome this challenge, Kennedy is testing whether the prosthesis, using sensors that record the wearer’s movements, can learn enough about its user to infer their intent and help carry out actions. “It can understand, from where you’re looking and how you’re providing innate signals, which task you would want to do,” says Kennedy.

Like many roboticists, Kennedy has been asked whether robots will take jobs away from people. While he stresses that society must consider such risks and address them as much as possible, he is optimistic overall about robots’ role in society. “My goal in robotics is to address the dull, dirty and dangerous tasks for humanity,” he says. “I think the benefits of that are immense.”

This story was originally published by Stanford Engineering.