Researchers in the emerging field of spatial computing have developed a prototype augmented reality headset that uses holographic imaging to overlay full-color, 3D moving images on the lenses of what would appear to be an ordinary pair of glasses. Unlike the bulky headsets of present-day augmented reality systems, the new approach delivers a visually satisfying 3D viewing experience in a compact, comfortable, and attractive form factor suitable for all-day wear.

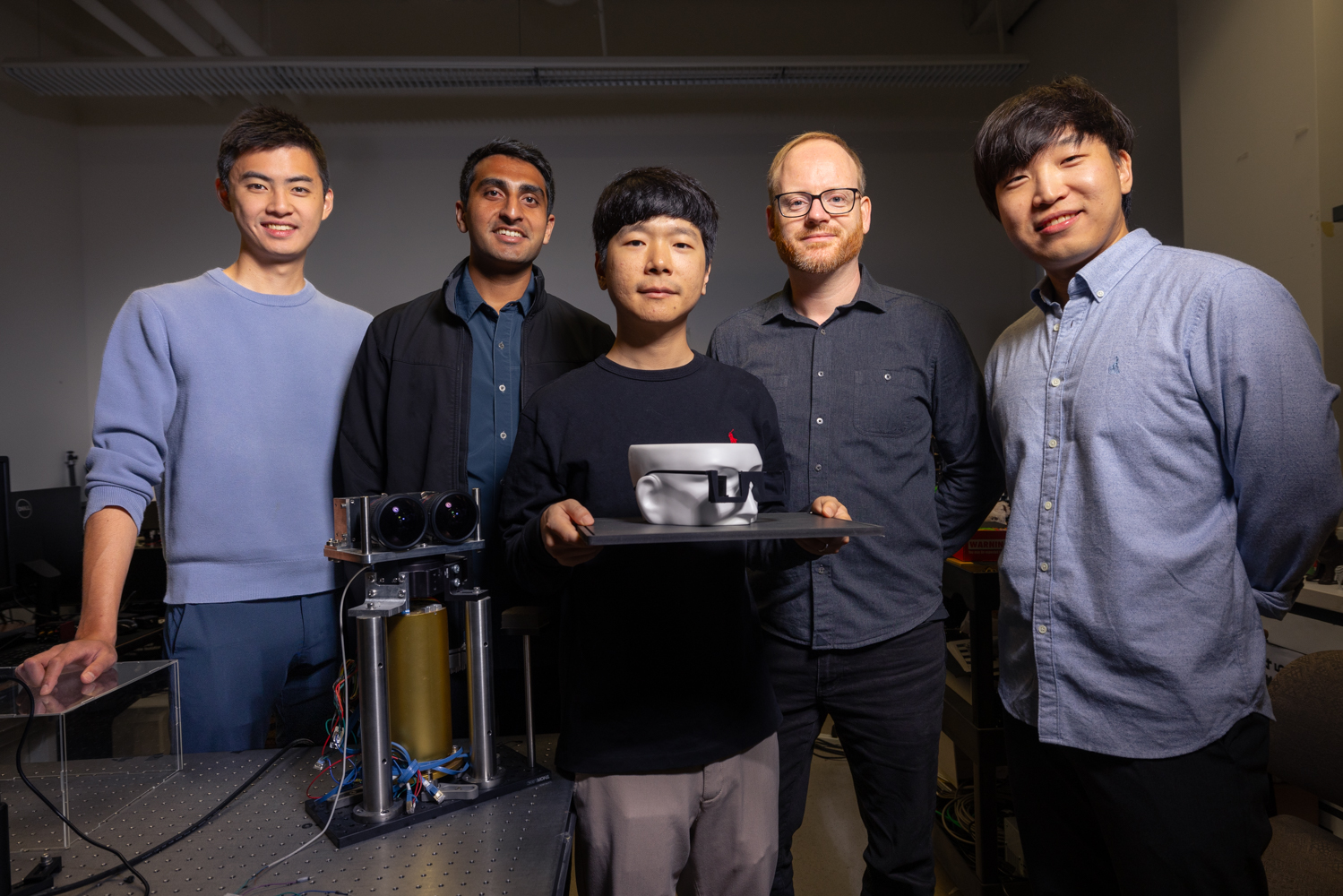

“Our headset appears to the outside world just like an everyday pair of glasses, but what the wearer sees through the lenses is an enriched world overlaid with vibrant, full-color 3D computed imagery,” said Gordon Wetzstein, an associate professor of electrical engineering and an expert in the fast-emerging field of spatial computing. Wetzstein and a team of engineers introduce their device in a new paper in the journal Nature.

Though only a prototype now, such a technology, they say, could transform fields stretching from gaming and entertainment to training and education – anywhere computed imagery might enhance or inform the wearer’s understanding of the world around them.

“One could imagine a surgeon wearing such glasses to plan a delicate or complex surgery or airplane mechanic using them to learn to work on the latest jet engine,” said Manu Gopakumar, a doctoral student in the Wetzstein-led Stanford Computational Imaging lab and co-first author of the paper.

New holographic augmented reality system that enables more compact 3D displays. | Andrew Brodhead

Barriers overcome

The new approach is the first to thread a complex maze of engineering requirements that have so far produced either ungainly headsets or less-than-satisfying 3D visual experiences that can leave the wearer visually fatigued, or even a bit nauseous at times.

“There is no other augmented reality system out there now with comparable compact form factor or that matches our 3D image quality,” said Gun-Yeal Lee, a postdoctoral researcher in the Stanford Computational Imaging lab and co-first author of the paper.

To succeed, the researchers have overcome technical barriers through a combination of AI-enhanced holographic imaging and new nanophotonic device approaches. The first hurdle was that the techniques for displaying augmented reality imagery often require the use of complex optical systems. In these systems, the user does not actually see the real world through the lenses of the headset. Instead, cameras mounted on the exterior of the headset capture the world in real time and combine that imagery with computed imagery. The resulting blended image is then projected to the user’s eye stereoscopically.

“The user sees a digitized approximation of the real world with computed imagery overlaid. It’s sort of augmented virtual reality, not true augmented reality,” explained Lee.
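To make the pass-through idea concrete, the blending step can be pictured as ordinary alpha compositing of a rendered frame over a camera frame. The sketch below is only an illustration under that assumption: the article does not say how any particular headset combines its imagery, and the function names and the per-pixel `alpha` mask are hypothetical.

```python
# Illustrative sketch only: blends computed imagery over a camera frame
# with the standard "over" operator. All names here are hypothetical.

def composite_pixel(camera_rgb, rendered_rgb, alpha):
    """Blend one rendered pixel over one camera pixel.

    alpha = 0.0 keeps the camera pixel, alpha = 1.0 keeps the rendered pixel.
    """
    return tuple(
        round(alpha * r + (1.0 - alpha) * c)
        for r, c in zip(rendered_rgb, camera_rgb)
    )


def composite_frame(camera, rendered, alpha_mask):
    """Blend two frames given as nested lists of (R, G, B) tuples."""
    return [
        [composite_pixel(c, r, a) for c, r, a in zip(cam_row, ren_row, a_row)]
        for cam_row, ren_row, a_row in zip(camera, rendered, alpha_mask)
    ]


# A 1x2 "frame": the first pixel is untouched, the second is half overlay.
camera = [[(100, 100, 100), (100, 100, 100)]]
rendered = [[(200, 0, 50), (200, 0, 50)]]
alpha_mask = [[0.0, 0.5]]
blended = composite_frame(camera, rendered, alpha_mask)
```

In such a scheme the wearer only ever sees the blended frame, which is why Lee describes the result as a digitized approximation of the real world.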

These systems, Wetzstein explains, are necessarily bulky because they place magnifying lenses between the wearer’s eye and the projection screens. Those lenses require a minimum distance between the eye, the lenses, and the screens, which adds to the size of the headset.

“Beyond bulkiness, these limitations can also lead to unsatisfactory perceptual realism and, often, visual discomfort,” said Suyeon Choi, a doctoral student in the Stanford Computational Imaging lab and co-author of the paper.

Killer app

To produce more visually satisfying 3D images, Wetzstein leapfrogged traditional stereoscopic approaches in favor of holography, a Nobel-winning visual technique developed in the late 1940s. Despite great promise in 3D imaging, more widespread adoption of holography has been limited by an inability to portray accurate 3D depth cues, leading to an underwhelming, sometimes nausea-inducing, visual experience.

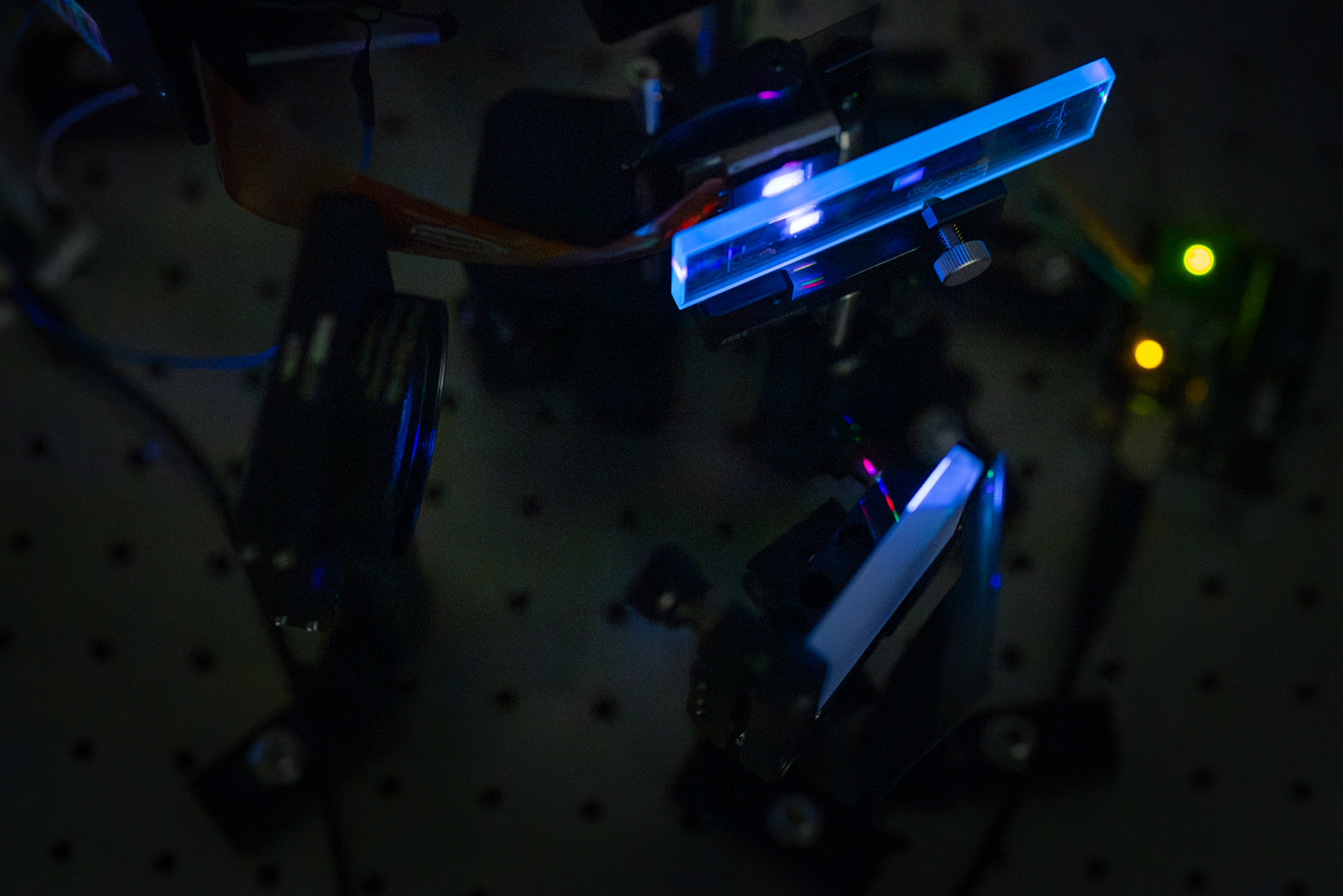

The Wetzstein team used AI to improve the depth cues in the holographic images. Then, using advances in nanophotonics and waveguide display technologies, the researchers were able to project computed holograms onto the lenses of the glasses without relying on bulky additional optics.

A waveguide is constructed by etching nanometer-scale patterns onto the lens surface. Small holographic displays mounted at each temple project the computed imagery through the etched patterns, which bounce the light within the lens before it is delivered directly to the viewer’s eye. Looking through the glasses’ lenses, the user sees both the real world and the full-color, 3D computed images displayed on top.
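Light that bounces within a lens is commonly kept there by total internal reflection, which occurs only when a ray strikes the lens surface at more than a critical angle. The short sketch below works through that condition using Snell's law. It is an assumption-laden illustration: the article does not name the confinement mechanism or the lens material, so the refractive index of 1.5 and the function names are hypothetical.

```python
import math

# Illustrative sketch only: the refractive index and names are assumptions,
# not values reported for the prototype.

def critical_angle_deg(n_lens, n_outside=1.0):
    """Smallest angle from the surface normal that gives total internal reflection."""
    if n_outside >= n_lens:
        raise ValueError("total internal reflection needs n_lens > n_outside")
    return math.degrees(math.asin(n_outside / n_lens))


def stays_in_lens(angle_deg, n_lens, n_outside=1.0):
    """True if a ray hitting the surface at angle_deg is reflected back inside."""
    return angle_deg > critical_angle_deg(n_lens, n_outside)


theta_c = critical_angle_deg(1.5)      # roughly 41.8 degrees for glass in air
steep_ray = stays_in_lens(30.0, 1.5)   # below the critical angle: escapes
shallow_ray = stays_in_lens(60.0, 1.5) # above the critical angle: stays guided
```

Under these assumptions, the etched patterns would need to redirect incoming light to an angle above the critical angle for it to travel along the lens, and below it again for the light to exit toward the eye.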

Using nanophotonic technologies called metasurface optics, the researchers designed and fabricated a novel waveguide that can relay 3D hologram information of RGB visible light in a single compact device with high transparency. These nanophotonic waveguide samples were fabricated in-house at the Stanford Nanofabrication Facility and Stanford Nano Shared Facilities. | Andrew Brodhead

Life-like quality

The 3D effect is enhanced because it is created both stereoscopically, in the sense that each eye sees a slightly different image, as it would in traditional 3D imaging, and holographically.

“With holography, you also get the full 3D volume in front of each eye increasing the life-like 3D image quality,” said Brian Chao, a doctoral student in the Stanford Computational Imaging lab and also co-author of the paper.

The ultimate outcome of the new waveguide display techniques and the improvement in holographic imaging is a true-to-life 3D visual experience that is visually satisfying to the user without the fatigue that has challenged earlier approaches.

“Holographic displays have long been considered the ultimate 3D technique, but it’s never quite achieved that big commercial breakthrough,” Wetzstein said. “Maybe now they have the killer app they’ve been waiting for all these years.”

For more information

Additional authors are from The University of Hong Kong and NVIDIA. Wetzstein is also a member of Stanford Bio-X, the Wu Tsai Human Performance Alliance, and the Wu Tsai Neurosciences Institute.

This research was funded by a Stanford Graduate Fellowship in Science and Engineering, the National Research Foundation of Korea (NRF) funded by the Ministry of Education, a Kwanjeong Scholarship, a Meta Research PhD Fellowship, the ARO PECASE Award, Samsung, and the Sony Research Award Program. Part of this work was performed at the Stanford Nano Shared Facilities (SNSF) and Stanford Nanofabrication Facility (SNF), supported by the National Science Foundation and the National Nanotechnology Coordinated Infrastructure.

Jill Wu, Stanford University School of Engineering: (386) 383-6061; jillwu@stanford.edu