Your friend texts you a photo of the dog she’s about to adopt but all you see is a tan, vaguely animal-shaped haze of pixels. To get you a bigger picture, she sends the link to the dog’s adoption profile because she’s worried about her data limit. One click and your screen fills with much more satisfying descriptions and images of her best-friend-to-be.

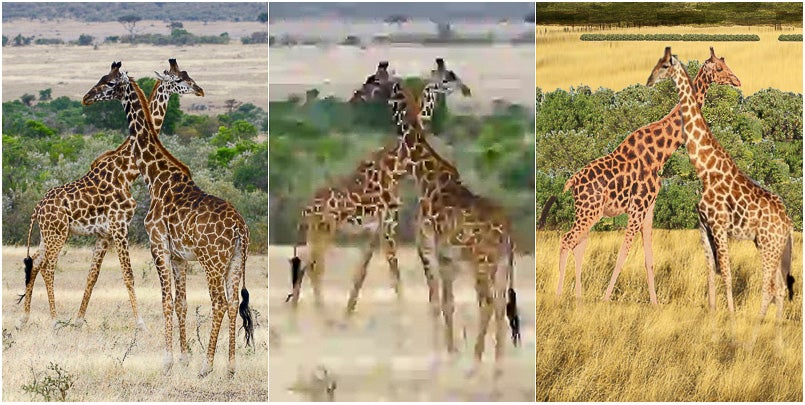

Given the image on the left, two study participants made the reconstruction on the right. People preferred their reconstruction to the image at the center, a highly compressed version of the original with a file size equal to the amount of data the participants used to make their reconstruction. (Image credit: Ashutosh Bhown, Soham Mukherjee and Sean Yang)

Sending a link instead of uploading a massive image is just one trick humans use to convey information without burning through data. In fact, these tricks might inspire an entirely new class of image compression algorithms, according to research by a team of Stanford University engineers and high school students.

The researchers asked people to compare images produced by a traditional compression algorithm that shrink huge images into pixilated blurs to those created by humans in data-restricted conditions – text-only communication, which could include links to public images. In many cases, the products of human-powered image sharing proved more satisfactory than the algorithm’s work. The researchers will present their work March 28 at the 2019 Data Compression Conference.

“Almost every image compressor we have today is evaluated using metrics that don’t necessarily represent what humans value in an image,” said Irena Fischer-Hwang, a graduate student in electrical engineering and co-author of the paper. “It turns out our algorithms have a long way to go and can learn a lot from the way humans share information.”

The project resulted from a collaboration between researchers led by Tsachy Weissman, professor of electrical engineering, and three high school students who interned in his lab.

“Honestly, we came into this collaboration aiming to give the students something that wouldn’t distract too much from ongoing research,” said Weissman. “But they wanted to do more, and that chutzpah led to a paper and a whole new research thrust for the group. This could very well become among the most exciting projects I’ve ever been involved in.”

A less lossy image

Converting images into a compressed format, such as a JPEG, makes them significantly smaller, but loses some detail – this form of conversion is often called “lossy” for that reason. The resulting image is lower quality because the algorithm has to sacrifice details about color and luminance in order to consume less data. Although the algorithms retain enough detail for most cases, Weissman’s interns thought they could do better.

In their experiments, two students worked together remotely to recreate images using free photo editing software and public images from the internet. One person in the pair had the reference image and guided the second person in reconstructing the photo. Both people could see the reconstruction in progress but the describer could only communicate over text while listening to their partner speaking.

The eventual file size of the reconstructed image was the compressed size of the text messages sent by the describer because that’s what would be required to recreate that image. (The group didn’t include audio information.)

The students then pitted the human reconstructions against machine-compressed images with file sizes that equaled those of reconstruction text files. So, if a human team created an image with only 2 kilobytes of text, they compressed the original file to the same size. With access to the original images, 100 people outside the experiments rated the human reconstruction better than the machine-based compression on 10 out of 13 images.

Blurry faces OK

When the original images closely matched public images on the internet, such as a street intersection, the human-made reconstructions performed particularly well. Even the reconstructions that combined various images often did well, except in cases that featured human faces. The researchers didn’t ask their judges to explain their ranking but they have some ideas about the disparities they found.

“In some scenarios, like nature scenes, people didn’t mind if the trees were a little different or the giraffe was a different giraffe. They cared more that the image wasn’t blurry, which means traditional compression ranked lower,” said Shubham Chandak, a graduate student in Weissman’s group and co-author of the paper. “But for human faces, people would rather have the same face even if it’s blurry.”

This apparent weakness in the human-based image sharing would improve as more people upload images of themselves to the internet. The researchers are also teaming up with a police sketch artist to see how his expertise might make a difference. Even though this work shows the value of human input, the researchers would eventually try to automate the process.

“Machine learning is working on bits and parts of this, and hopefully we can get them working together soon,” said Kedar Tatwawadi, a graduate student in Weissman’s group and co-author of the paper. “It seems like a practical compressor that works with this kind of ideology is not very far away.”

Calling all students

Weissman stressed the value of the high school students’ contribution, even beyond this paper.

“Tens if not hundreds of thousands of human engineering hours went into designing an algorithm that three high schoolers came and kicked its butt,” said Weissman. “It’s humbling to consider how far we are in our engineering.”

Due to the success of this collaboration, Weissman has created a formal summer internship program in his lab for high schoolers. Imagining how an artist or students interested in psychology or neuroscience could contribute to this work, he is particularly keen to bring on students with varied interests and backgrounds.

Lead authors of this paper are Ashutosh Bhown of Palo Alto High School, Soham Mukherjee of Monta Vista High School and Sean Yang of Saint Francis High School. Weissman is also a member of Stanford Bio-X and the Wu Tsai Neurosciences Institute.

This research was funded by the National Science Foundation, the National Institutes of Health, the Stanford Compression Forum and Google.

To read all stories about Stanford science, subscribe to the biweekly Stanford Science Digest

Media Contacts

Taylor Kubota, Stanford News Service: (650) 724-7707, tkubota@stanford.edu