
November 19, 2012

Stanford professor correctly called 332 electoral votes for Obama

Political science Professor Simon Jackman's Pollster blog used over 1,200 polls to call the election with remarkable precision.

By Max McClure

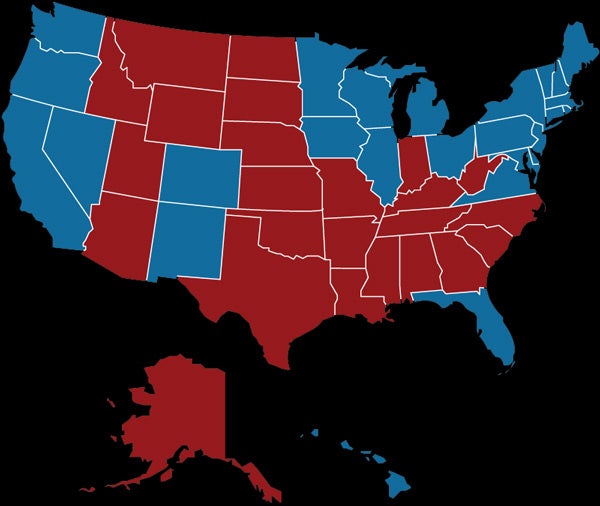

Results of the Nov. 6 presidential election were accurately forecast by Stanford political scientist Simon Jackman. (Illustration: Dr_Flash / Shutterstock)

Barack Obama wasn't the only victor in this last presidential election. In the high-profile battle to predict the race for the White House, data scientists resoundingly defeated the more traditional political oracles that typically dominate political coverage.

Stanford political science Professor Simon Jackman, from his Pollster blog on the Huffington Post, used reams of polls to correctly call all 50 states and the District of Columbia. He wasn't alone: the New York Times' Nate Silver; Sam Wang, a Princeton associate professor of molecular biology and neuroscience; and Drew Linzer, an Emory University assistant professor of political science and Stanford visiting assistant professor, all used similar methods to achieve 100 percent accurate predictions.

"We ended up being right," Jackman said. "And not just right, but staggeringly right."

Meanwhile, talking heads, and even political scientists making use of economic and social indicators, were left describing the election as a "toss-up."

A clear outcome

Jackman has been predicting elections with his statistical model in one form or another since 2000, though never in such a high-profile way.

Intensive state-level polling has become the norm since the 2000 Bush-Gore race brought home the importance of the Electoral College. Jackman's model took in this wealth of state-level data, correcting for differing polling sample sizes as well as "house effects" – biases intrinsic to each polling firm, determined by how that firm's numbers compare to the average across polls. The model was continually updated as this new information came in.
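The two corrections described above can be illustrated with a small sketch. This is not Jackman's model, whose details the article does not give. It is a simplified stand-in that weights each poll by its sample size and estimates a firm's house effect as its average deviation from the other polls in the same state. The firm names, state names and numbers are all hypothetical.

```python
from collections import defaultdict

# Hypothetical polls: (state, firm, candidate share in percent, sample size).
POLLS = [
    ("State X", "Firm A", 52.0, 1000),
    ("State X", "Firm B", 48.0, 1000),
    ("State Y", "Firm A", 47.0, 500),
    ("State Y", "Firm B", 43.0, 500),
]


def weighted_mean(pairs):
    """Mean of (value, sample_size) pairs, weighted by sample size."""
    total = sum(n for _, n in pairs)
    return sum(value * n for value, n in pairs) / total


def house_effects(polls):
    """Estimate each firm's lean as its weighted average deviation
    from the weighted average of all polls in the same state."""
    by_state = defaultdict(list)
    for state, _, share, n in polls:
        by_state[state].append((share, n))
    state_mean = {s: weighted_mean(p) for s, p in by_state.items()}

    deviations = defaultdict(list)
    for state, firm, share, n in polls:
        deviations[firm].append((share - state_mean[state], n))
    return {firm: weighted_mean(d) for firm, d in deviations.items()}


def adjusted_estimate(state_polls, effects):
    """Sample-size-weighted average of a state's polls after subtracting
    each firm's estimated house effect (0 for unknown firms)."""
    return weighted_mean(
        [(share - effects.get(firm, 0.0), n) for _, firm, share, n in state_polls]
    )


effects = house_effects(POLLS)
# A state polled only by Firm A: its reading is pulled back by Firm A's lean.
new_state = [("State Z", "Firm A", 55.0, 800)]
estimate = adjusted_estimate(new_state, effects)
```

In this made-up data, Firm A runs consistently higher than Firm B, so a state polled only by Firm A gets an estimate below its raw number.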

When it came to actually estimating candidates' support in an individual state, however, Jackman didn't only use polls from that state.

Historical data show that certain states tend to "move together," following the same polling trends. These tend to be regions, like the Pacific West or the Deep South. Similarly, certain states – Ohio and Michigan, for instance – tend to track closely with national polls.

Taking these linkages into account vastly increased the predictive power of the model.

In the months leading up to the election, Jackman had Obama as a strong favorite. By Nov. 6, after incorporating more than 1,200 polls into the model, he gave Obama a 91.4 percent chance of victory.
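The article does not say how Jackman turned state-level estimates into a single probability. One common approach, sketched here as an assumption rather than a description of his method, is to simulate many elections in which every state shares a common polling error (so states "move together") plus its own independent error, then count how often the candidate reaches the required electoral votes. The states, margins, electoral vote counts and error sizes below are hypothetical.

```python
import random

# Hypothetical inputs: state -> (estimated margin in points, electoral votes).
STATES = {
    "State A": (3.0, 20),
    "State B": (1.0, 18),
    "State C": (-2.0, 15),
}


def win_probability(states, shared_sd, state_sd, votes_needed,
                    trials=10000, seed=0):
    """Share of simulated elections in which the candidate wins at least
    `votes_needed` electoral votes.

    shared_sd: standard deviation of the error common to all states.
    state_sd:  standard deviation of each state's independent error.
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    wins = 0
    for _ in range(trials):
        shared_error = rng.gauss(0.0, shared_sd)
        votes = 0
        for margin, electoral_votes in states.values():
            simulated = margin + shared_error + rng.gauss(0.0, state_sd)
            if simulated > 0:
                votes += electoral_votes
        if votes >= votes_needed:
            wins += 1
    return wins / trials


# 53 electoral votes in total, so 27 are needed for a majority.
p_linked = win_probability(STATES, shared_sd=2.0, state_sd=1.0, votes_needed=27)
p_certain = win_probability(STATES, shared_sd=0.0, state_sd=0.0, votes_needed=27)
```

With both error terms set to zero the simulation simply reads off the current margins. Increasing `shared_sd` makes the states rise and fall together, which changes how often a narrow lead holds up.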

"The outcome of the election was actually pretty clear to people bothering to look at public polling," said Jackman.

Bring data

Beyond the growing prominence of state-level polls, there's been a cultural shift over the last decade that made this election cycle one for Big Data. The very existence of a "data scientist" profession is a recent development.

"Data analysis has been having a real moment in the sunshine," Jackman said. "It's a movement that's touching every aspect of life, how governments or businesses or sports teams make decisions."

Nevertheless, the poll analysts took flak for their predictions. Nate Silver, in particular, was derided as being "left-wing" and a "joke." New York Times columnist David Brooks said that when pollsters make projections, "they're getting into silly land."

But the agreement and accuracy of the poll-based prediction models stood in marked contrast to the uneven record of other prediction methods.

Methods based on economic "fundamentals," such as unemployment and income growth, are playing "a somewhat different game," Jackman explained – they concentrate on nationwide, rather than state-level, results and are theoretically capable of making predictions far in advance of an election.

Their success this year, however, was mixed. Political science professors Kenneth Bickers of the University of Colorado-Boulder and Michael Berry of the University of Colorado-Denver are cases in point. They incorrectly predicted a Romney victory based on the nation's high unemployment and stagnant income growth.

"The ability of that approach to generate precise predictions is just grossly limited by the amount of data they have," said Jackman. "It's tough to say something authoritative about the election when we've just gone from data point 15 to data point 16."

The gut-feeling pundits fared even worse, with talking heads like Dick Morris making dramatically incorrect predictions of Republican landslides. Of course, TV personalities make no pretense of scientific accuracy. "A lot of it's entertainment," said Jackman.

"But I think we're closer to the day when a pundit will say X, and the host will turn and say, 'What evidence do you have?' "

And on that day, elections coverage may finally obey the dictum of the data scientist, according to Jackman: "In God we trust. All others must bring data."

-30-
